Chapter 6 is about fine-tuning for classification.

The example used is building a spam classifier.

A spam classifier decides whether a message is spam or not, so the model has to output one of two class labels: 0 (not spam) or 1 (spam).

1. Steps of Fine-tuning

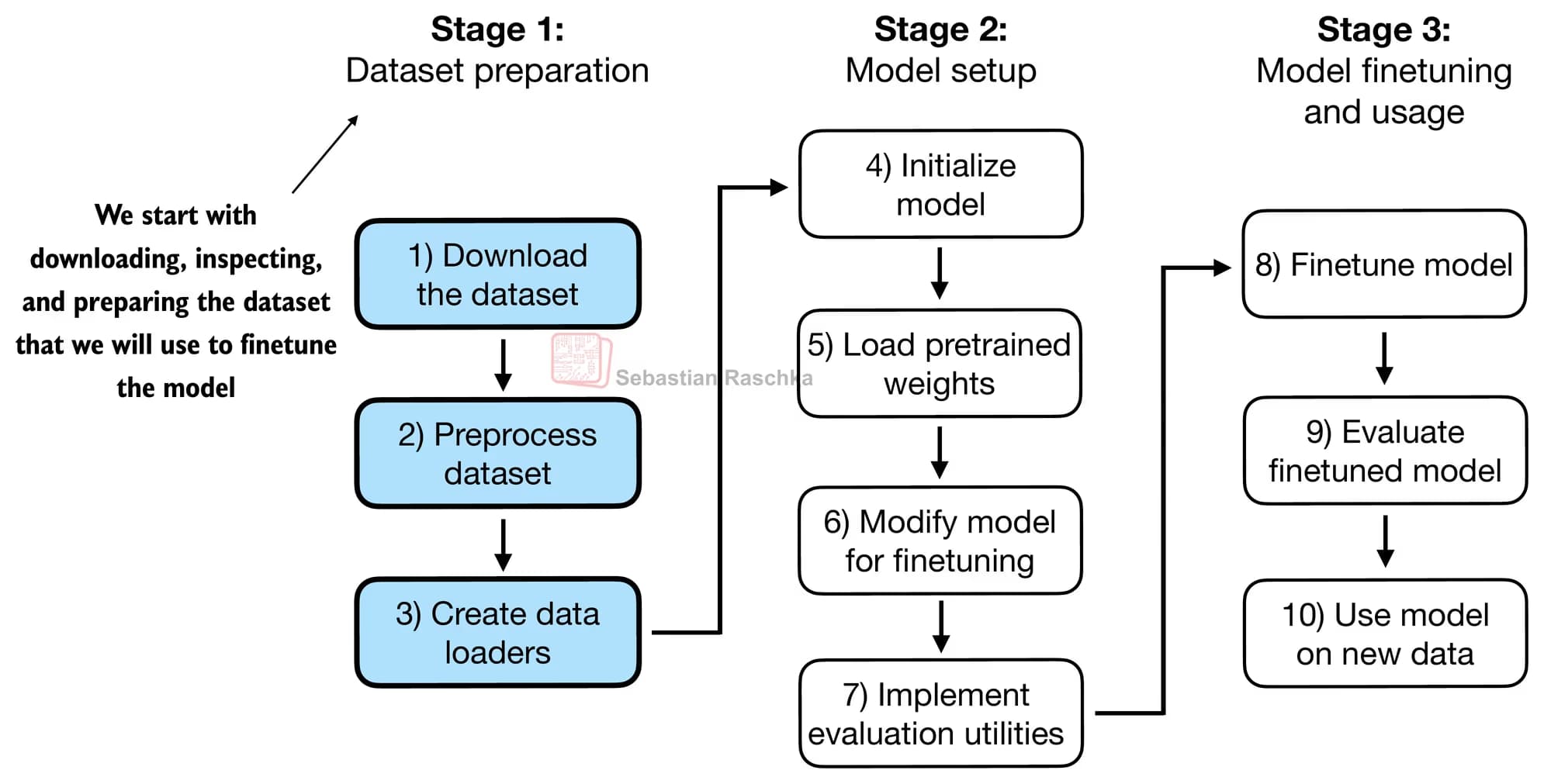

The fine-tuning process is similar to the process of training a model from scratch.

You prepare the dataset, load the pretrained weights, then fine-tune and evaluate.

The slightly different part is the output layer: it is replaced with one that has only two outputs, 0 (not spam) and 1 (spam).

The prediction is based on the output for the last token. Because of causal attention, the last token is the only one that has seen every other token in the input, so its output carries the most information.
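To make the last-token idea concrete, here is a minimal sketch in plain Python rather than PyTorch. The logit values and the function name `classify_from_last_token` are made up for illustration; a real model would produce one row of logits per token.

```python
# Hypothetical model output for one message: one row per token,
# each row holding two scores [not spam, spam]. Values are made up.
token_logits = [
    [0.2, -0.1],
    [0.5, 0.3],
    [-1.0, 2.0],  # last token: the only row used for the prediction
]

def classify_from_last_token(logits_per_token):
    """Return the index of the largest score in the last token's row."""
    last = logits_per_token[-1]
    return max(range(len(last)), key=lambda i: last[i])

label = classify_from_last_token(token_logits)
print("spam" if label == 1 else "not spam")
```

The earlier rows are ignored entirely, which is why the choice of the last token matters.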

Finally, the loss is computed using cross-entropy.
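As a rough illustration of what cross-entropy does for a single example, here is a sketch in plain Python. The function name and the sample logits are my own assumptions; in practice a framework's built-in loss would be used instead.

```python
import math

def cross_entropy(logits, target):
    """Negative log of the softmax probability assigned to the target class."""
    # Subtract the max before exponentiating for numerical stability.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

# Made-up logits [not spam, spam] for one message.
logits = [-1.0, 2.0]
print(cross_entropy(logits, 1))  # small loss: the model favors "spam"
print(cross_entropy(logits, 0))  # larger loss: the model disfavors "not spam"
```

The loss is small when the model puts a high score on the correct label and grows as the score for the correct label falls behind.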

2. Fine-tuning the Model with Supervised Learning Data

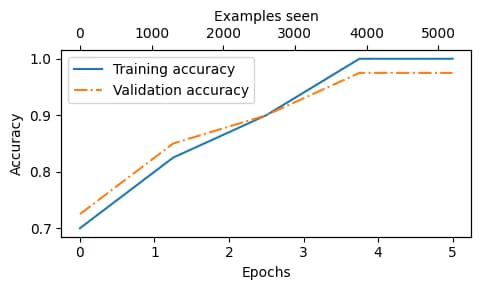

The data is split into training and validation sets, and the model is trained over multiple epochs.
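The split itself can be sketched in a few lines of plain Python. The 80/20 ratio, the seed, the function name `train_val_split`, and the sample messages are all assumptions for illustration, not values taken from the book.

```python
import random

def train_val_split(examples, train_frac=0.8, seed=123):
    """Shuffle a copy of the examples and cut it into training and validation parts."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_frac)
    return shuffled[:cut], shuffled[cut:]

# Made-up (text, label) pairs: 1 = spam, 0 = not spam.
data = [
    ("Win a free prize now", 1),
    ("See you at lunch", 0),
    ("Claim your reward", 1),
    ("Meeting moved to 3pm", 0),
    ("Cheap loans, apply today", 1),
]

train_set, val_set = train_val_split(data)
print(len(train_set), len(val_set))
```

Shuffling before the cut keeps spam and non-spam examples from ending up in only one of the two sets when the source file is sorted by label.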

When training accuracy and validation accuracy stay close to each other, the model performs about as well on data it has not seen as on the data it was trained on.

In other words, there are no signs of overfitting.
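The comparison can be written down as a small check. This is only a sketch: the function names and the 0.05 gap threshold are my own assumptions, and there is no standard cutoff for what counts as overfitting.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)

def looks_overfit(train_acc, val_acc, max_gap=0.05):
    """Flag a run when training accuracy exceeds validation accuracy by more than max_gap.

    max_gap is an arbitrary illustrative threshold, not a standard value.
    """
    return (train_acc - val_acc) > max_gap

print(accuracy([1, 0, 1, 1], [1, 0, 0, 1]))
print(looks_overfit(0.97, 0.96))  # accuracies are close
print(looks_overfit(0.99, 0.80))  # training accuracy is far ahead
```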

With the fine-tuned model, we can now classify new messages as spam or not spam.

3. Thoughts

Running a 1.2B model is a stretch even on my Mac mini, but it makes me think that if possible, I’d like to train an LLM and try various things with it.

I’m even considering using this approach when I write a paper next year.

I should hurry up and finish the book, and then start learning PyTorch in earnest.
