Today is December 14.

The challenge period actually ended two weeks ago, but I couldn’t just give up on writing a review.

TILs like this one eventually become real flesh and blood for me later.

I’ll try to focus more on the meaning than on the code itself.

1. Loss calculation of the model

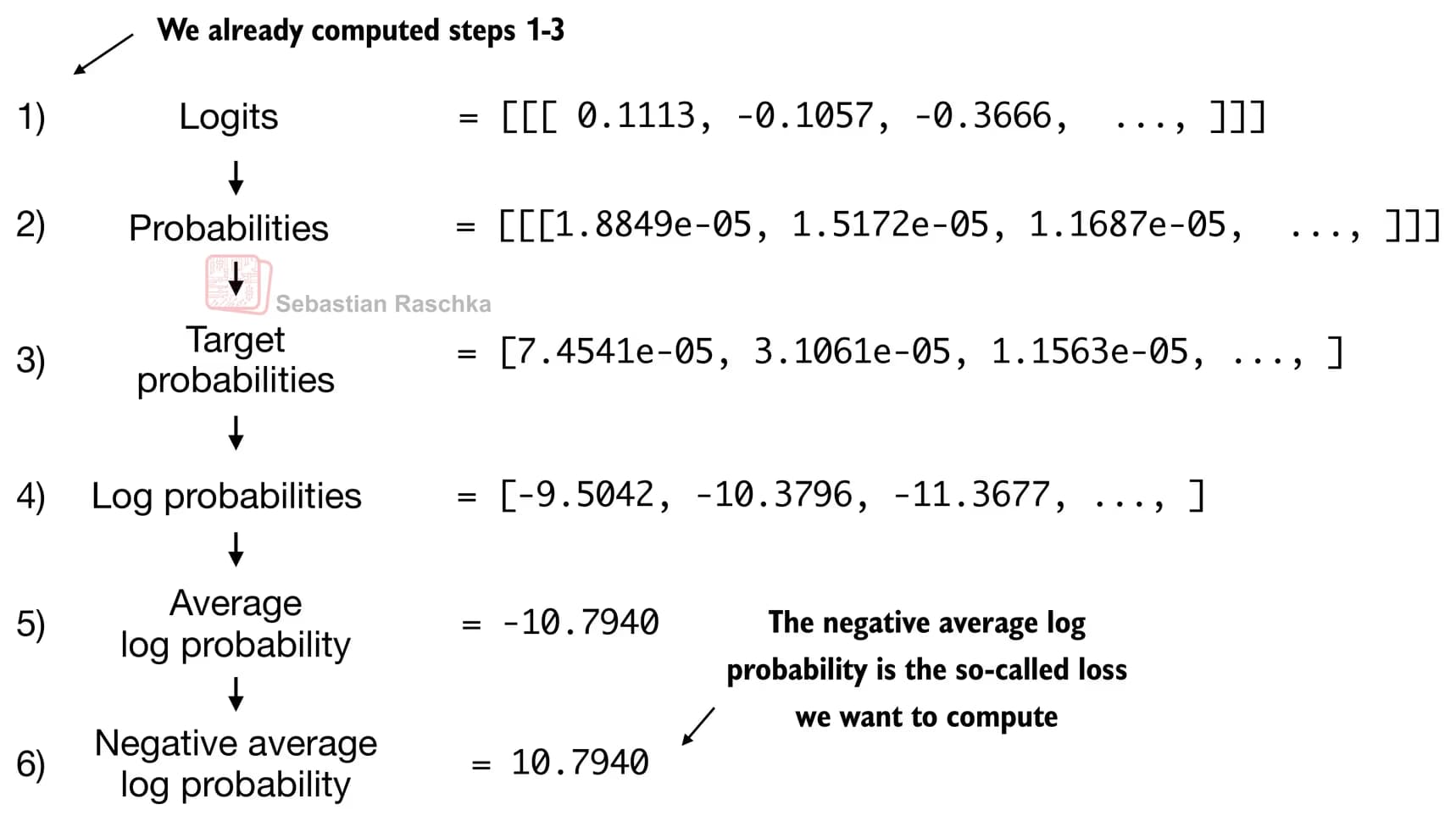

This part is about how to calculate the loss after building a GPT model.

Based on the input tokens, GPT computes the probabilities of the next token to be output.

From these probabilities, we pick out only the probability the model assigned to the correct (target) token at each position, take its log, average the results, and negate the average. That final number is the cross-entropy loss.

Cross-entropy loss is the negative of the average log probability of the correct tokens, so it directly measures how confident the model was about the right answer. The closer the average log probability gets to 0, the smaller the loss.
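
To make the steps concrete for myself, here is a minimal sketch in plain Python (not the PyTorch code from the challenge). The toy logits, the 4-token vocabulary, and the function names `softmax` and `cross_entropy` are my own assumptions for illustration.

```python
import math

def softmax(logits):
    """Turn a list of raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits_per_position, targets):
    """Negative average log probability of the correct token at each position."""
    log_probs = []
    for logits, target in zip(logits_per_position, targets):
        probs = softmax(logits)
        # Keep only the probability assigned to the correct token, then take its log.
        log_probs.append(math.log(probs[target]))
    return -sum(log_probs) / len(log_probs)

# Hypothetical toy example: vocabulary of 4 tokens, 2 positions.
logits = [[2.0, 0.5, 0.1, -1.0],
          [0.2, 0.1, 3.0, 0.0]]
targets = [0, 2]  # index of the correct next token at each position

loss = cross_entropy(logits, targets)
print(loss)
```

If I understand correctly, PyTorch's `torch.nn.functional.cross_entropy` bundles these same steps (softmax, log, pick the target, average, negate) into one call.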

What I was curious about here was this: we go from logits to probabilities, target token probabilities, log probabilities, the average log probability, and finally the negative average log probability. So why does minimizing that number make the model more accurate?

How does this process actually increase the probability of the correct answer?

It turns out I didn’t really understand what logits were.

Simply put, the model outputs a real-valued vector of raw scores called logits, and softmax turns these scores into a probability distribution by exponentiating each one and dividing by the sum. Only the relative differences between the scores matter.

In this process, larger scores get larger probabilities, and scores far below the largest get probabilities close to 0.
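
A small sketch of softmax in plain Python shows this behavior. The example scores are made up, and `softmax` here is my own helper, not a library function.

```python
import math

def softmax(logits):
    """Exponentiate each score and divide by the sum of the exponentials."""
    m = max(logits)  # subtracting the max does not change the result, only avoids overflow
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

scores = [4.0, 2.0, 0.0, -4.0]  # hypothetical logits
probs = softmax(scores)

for s, p in zip(scores, probs):
    print(f"logit {s:+.1f} -> probability {p:.4f}")

# Adding the same constant to every score leaves the probabilities unchanged,
# because only the differences between scores matter.
shifted = softmax([s + 100.0 for s in scores])
```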

And since probabilities are between 0 and 1, and log 1 = 0, a log probability is never positive. The closer the log probability of the correct token is to 0, the closer its probability is to 1, which means the model is more likely to choose it.

Therefore, the loss is a signal that makes the model update its parameters, through gradients, so that the log probability of the correct token moves closer to 0.
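
To convince myself that minimizing the loss really raises the probability of the correct token, here is a toy sketch. It is a big simplification: it applies gradient descent directly to three made-up logits instead of to a real model's weights, and the learning rate and step count are arbitrary choices. It relies on the standard result that the gradient of the cross-entropy loss with respect to the logits is the softmax probabilities minus the one-hot target.

```python
import math

def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def loss_and_grad(logits, target):
    """Cross-entropy loss for one position and its gradient w.r.t. the logits."""
    probs = softmax(logits)
    loss = -math.log(probs[target])
    # d(loss)/d(logit_i) = p_i - 1 for the target, p_i for every other token.
    grad = [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]
    return loss, grad

logits = [0.0, 0.0, 0.0]  # hypothetical start: the model is undecided
target = 1                # index of the correct token
learning_rate = 1.0       # arbitrary value for the demo

history = []  # (loss, probability of the correct token) before each update
for step in range(5):
    loss, grad = loss_and_grad(logits, target)
    history.append((loss, softmax(logits)[target]))
    # Gradient descent: move each logit against its gradient.
    logits = [z - learning_rate * g for z, g in zip(logits, grad)]

for step, (loss, p) in enumerate(history):
    print(f"step {step}: loss={loss:.4f}, p(correct)={p:.4f}")
```

Each update pushes the correct token's score up and the others down, so the loss shrinks and the probability of the correct token grows.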

2. LLM training and decoding strategies

Now, by repeating the process of evaluating the loss and updating the weights using everything we did earlier, we can train an LLM.

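Once the model is trained, there were two decoding strategies for choosing the next token that I found impressive.

If the model always picks only the single highest-probability token at each step (greedy decoding), the responses lose diversity.

So we divide each logit by a number called the temperature before applying softmax, and then sample from the resulting distribution. A temperature above 1 flattens the distribution and adds diversity, while a temperature below 1 sharpens it toward the top token.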

This is called temperature scaling.
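
Here is a minimal sketch of the idea in plain Python. The logits and the helper name `softmax_with_temperature` are assumptions of mine, not code from the challenge.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Divide every logit by the temperature, then apply softmax."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical next-token scores

sharp = softmax_with_temperature(logits, 0.5)   # low temperature: closer to greedy
normal = softmax_with_temperature(logits, 1.0)  # temperature 1: plain softmax
flat = softmax_with_temperature(logits, 5.0)    # high temperature: closer to uniform

for name, probs in [("T=0.5", sharp), ("T=1.0", normal), ("T=5.0", flat)]:
    print(name, [round(p, 3) for p in probs])
```

The order of the tokens never changes. Only how strongly the top token dominates does.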

The other is top-k sampling, where only the k tokens with the highest scores are kept and the next token is sampled from within that set.
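
A rough sketch of top-k sampling, again in plain Python with made-up logits. The function name `top_k_sample` and its parameters are hypothetical. A PyTorch version would more likely mask the non-top-k logits and sample with tensor operations.

```python
import math
import random

def top_k_sample(logits, k, temperature=1.0, rng=random):
    """Sample a token index from only the k highest-scoring tokens."""
    # Indices of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax weights over the surviving tokens only (with optional temperature).
    scaled = [logits[i] / temperature for i in top]
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    # random.choices normalizes the weights for us.
    return rng.choices(top, weights=weights, k=1)[0]

logits = [2.0, 0.5, 1.5, -1.0]  # hypothetical next-token scores
rng = random.Random(0)          # seeded only to make the demo repeatable

samples = [top_k_sample(logits, k=2, rng=rng) for _ in range(200)]
print(sorted(set(samples)))  # only indices from the top 2 can appear
```

With k=1 this reduces to greedy decoding, since only the top token survives.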

3. Thoughts

Looking at the code, my eyes kept darting around because I had to grasp the mathematical concepts along with PyTorch, but once I understood it, it wasn’t that hard.

I struggled a bit because the loss formula I learned before and the way loss is calculated in LLMs are a bit different.

Still, I feel like I’ve come to understand it deeply enough that I could teach it to kids.

For the kids, too, I think it’d be better to focus less on the formulas themselves and more on helping them build real understanding.
